At this year’s NVIDIA GTC conference for AI innovators, technologists, and creatives, the company announced the launch of the RTX A5000 and RTX A4000 graphics processing units (GPUs), joining the RTX A6000 in its new line of Ampere graphics cards. Let’s take a look at what this means and why it is important.

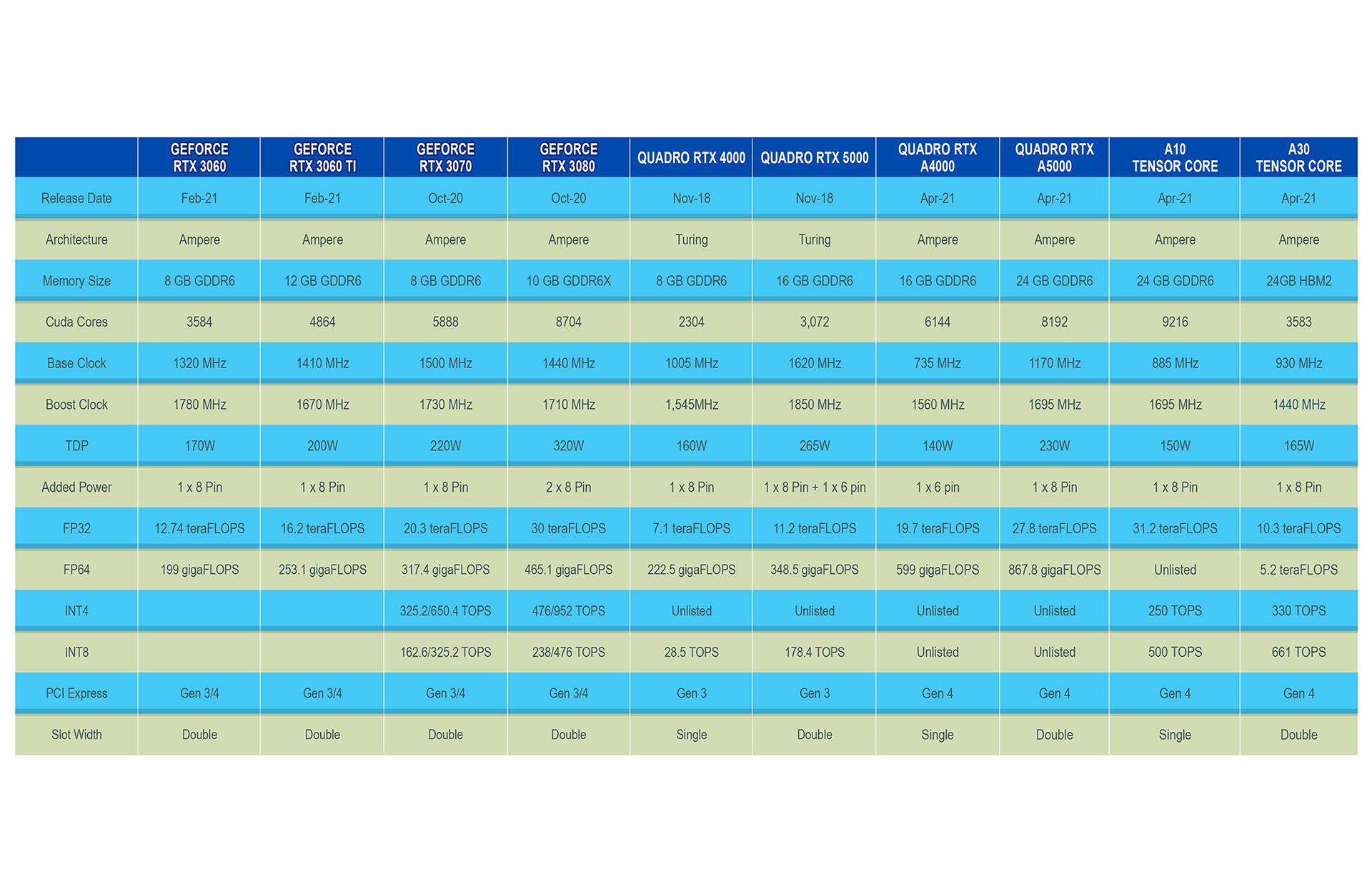

In short, NVIDIA has upgraded these cards just about across the board; the table above gives the full breakdown. The RTX A4000, successor to the Quadro RTX 4000, offers 16 GB of GDDR6 memory, while the RTX A5000 takes it further with 24 GB. Both GPUs offer far more CUDA cores than the Quadro RTX cards they replace, with 6144 on the RTX A4000 and 8192 on the RTX A5000. The RTX A4000 also has 48 RT cores, and the RTX A5000 has 64. Memory bandwidth is another major upgrade: the RTX A4000 delivers 448 GB/s and the RTX A5000 768 GB/s, up from 416 GB/s on the Quadro RTX 4000.

NVIDIA says the new GPUs integrate second-generation RT cores “to further boost ray tracing, and third-generation Tensor cores to accelerate AI-powered workflows such as rendering denoising, deep learning super sampling, and generative design.”

As industrial applications such as edge AI/deep learning, 3D laser profiling, and vision-guided robotics evolve and demand ever faster, more powerful processors, GPUs have become significantly important in the automation space. These new NVIDIA models represent another step forward in that progression. In fact, NVIDIA recently announced that its new AI inference platform achieved record-setting performance.

Raising the Bar for AI Inference

The newly expanded AI inference platform — featuring NVIDIA A30 and A10 GPUs — reached new performance heights in every category on the latest release of MLPerf. MLPerf is the industry’s established benchmark for measuring AI performance across workloads such as computer vision, medical imaging, recommender systems, speech recognition, and natural language processing.

NVIDIA says it achieved these results using the NVIDIA AI platform, which spans a range of GPUs and AI software such as TensorRT and NVIDIA Triton Inference Server. According to the company, NVIDIA was the only company to submit results for every test in the data center and edge categories, and it delivered top performance across all MLPerf workloads. The company also demonstrated its Ampere architecture’s Multi-Instance GPU (MIG) capability by running all seven MLPerf offline tests simultaneously on a single GPU, each in its own MIG instance. That configuration performed nearly identically to a single MIG instance running alone.
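MIG splits one Ampere GPU into isolated instances, and a common way to give each process exactly one of them is to set the `CUDA_VISIBLE_DEVICES` environment variable to that instance's MIG UUID. The sketch below is not NVIDIA's MLPerf harness. It is a minimal illustration that assumes the MIG instances have already been created (for example by an administrator using `nvidia-smi`). `plan_mig_launches`, `LaunchPlan`, and the workload names and UUIDs in the usage example are hypothetical placeholders invented for this example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class LaunchPlan:
    """One worker process: the workload it runs and the environment it gets."""
    workload: str
    env: dict


def plan_mig_launches(workloads, mig_uuids, base_env=None):
    """Pair each workload with its own MIG instance (hypothetical helper).

    Each worker sees only one MIG instance because CUDA_VISIBLE_DEVICES
    is set to that instance's UUID. Extra MIG instances are left unused.
    """
    if len(workloads) > len(mig_uuids):
        raise ValueError(
            f"{len(workloads)} workloads but only {len(mig_uuids)} MIG instances"
        )
    # Start from the caller's environment (or a supplied one) so PATH etc. survive.
    base = dict(os.environ if base_env is None else base_env)
    plans = []
    for workload, uuid in zip(workloads, mig_uuids):
        env = dict(base)  # copy so workers do not share one mutable dict
        env["CUDA_VISIBLE_DEVICES"] = uuid
        plans.append(LaunchPlan(workload, env))
    return plans


if __name__ == "__main__":
    # Placeholder names; real MIG UUIDs are listed by `nvidia-smi -L`.
    workloads = [f"benchmark_{i}" for i in range(7)]
    uuids = [f"MIG-placeholder-{i}" for i in range(7)]
    for plan in plan_mig_launches(workloads, uuids, base_env={}):
        print(plan.workload, plan.env["CUDA_VISIBLE_DEVICES"])
```

In practice, each plan's `env` would be passed to something like `subprocess.Popen(cmd, env=plan.env)` so that the seven workers run side by side, each confined to its own slice of the GPU.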

While the results are a nice feather in the cap for NVIDIA, they highlight the fact that AI inference capabilities continue to improve, which will allow companies in industrial automation and beyond to adapt to growing and changing requirements. As this happens, CoastIPC will be ready to provide the marketplace with customized PCs that either include GPUs or can be expanded with graphics cards, as well as other AI accelerators such as TPUs and VPUs. Reach out today with any questions about your GPU computing needs: [email protected].